Yes yes, #deepdream. But as Memo Akten and others point out, this is going to turn to kitsch as rapidly as Walter Keane and lolcats unless we can find a way to stop the massive firehose of repetitive #puppyslug that has been opened by a few websites letting us upload selfies. I don't think we should stop at puppyslug (and its involved intermediary layers), but training a separate neural network turns out to be technically difficult for most artists. I believe applying machine learning in content synthesis is a wide open frontier in computational creativity, so let's please do what we can to save this emerging aesthetic from its puppyslug typecast. If we can get over the hurdle of training brains, and start to apply inceptionism to other media (vector based 2D visuals, video clips, music, to name a few) then the technique might diversify into a more dignified craft that would be way harder to contain within a single novelty hashtag.

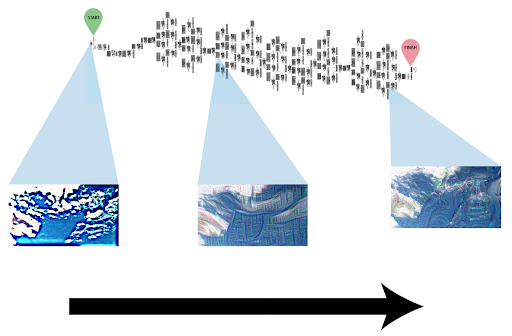

Let's talk about this one brain everyone loves. It's a bvlc_googlenet trained on ImageNet, provided in the Caffe Model Zoo. That's the one that gives us puppyslug because it has seen so many dogs, birds, and pagodas. It's also the one that gives you the rest of the effects offered by dreamscopeapp, because they're just poking the brain in other places besides the very end. Again, even the deluxe options package is going to get old fast. I refer to this caffemodel file as the puppyslug brain. Perhaps the reason for all the doggies has to do with the number of dog pictures in ImageNet. A diagram of the images coming from different parts of this neural network follows shortly. You can imagine its thought process as a collection of finely tuned Photoshop filters, strung together into a hierarchical pipeline. Naturally, the more complex stuff is at the end.

My goal in this post is to show you some deepdream images that were done with neural networks trained on other datasets, data other than the entirety of ImageNet. I hope that these outcomes will convince you that there's more to it, and that the conversation is far from over. Some of the pre-trained neural nets were used unaltered from the Caffe Model Zoo, and others were ones I trained just for this exploration.

It's important to keep in mind that feeding the neural net next to nothing results in output just as extravagant as feeding it the Sistine Chapel. It is the job of the artist to select a meaningful guide image, one whose relationship to the training set carries interesting cultural significance. Without that curated relationship, all you have is a good old computational acid trip.

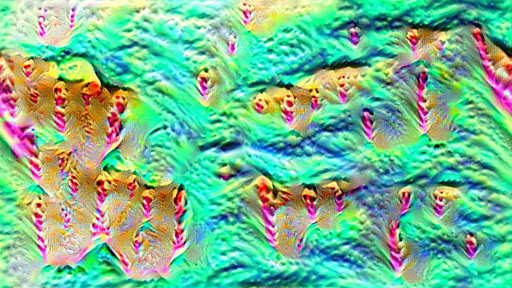

The following image is a chromatic gradient guiding a deep-dream by a GoogLeNet trained on classical Western fine art history up to impressionism, using crawled images from Dr. Emil Krén's Web Gallery of Art. This version uses photometric distortion to prevent over-fitting. I think it results in more representational imagery. The image is 2000x2000 pixels, so download it and take a closer look in your viewer of choice.

This one is the same data, but the training set did not contain the photometric distortions. The output still contains representational imagery.

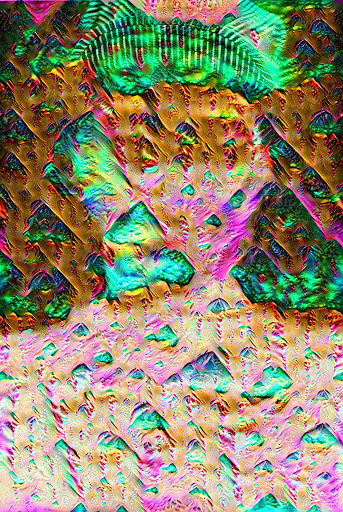

The below image is a neural network trained to do gender classification, deepdreaming about Bruce Jenner, on the cover of Playgirl Magazine in 1982. Whether or not Bruce has been properly gender-classified may be inconsequential to the outcome of the deepdream image.

Notice that when gender_net is simply run on a picture of clouds, you still see the lost souls poking out of Freddy Krueger's belly.

Gender_net deepdreaming Untitled A by Cindy Sherman (the one with the train conductor's hat).

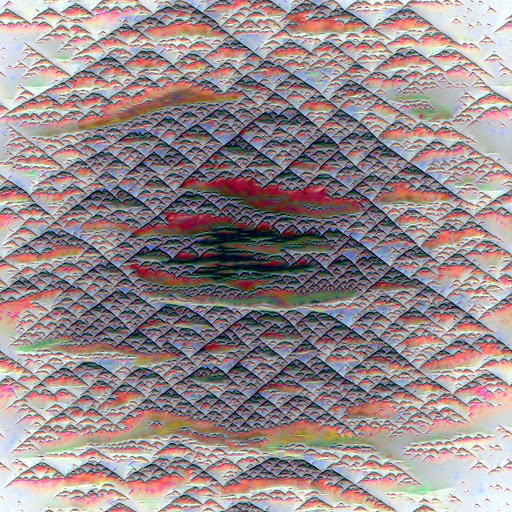

This came from a more intermediary layer, deep-dreaming a neural network custom trained to classify faces from Labeled Faces in the Wild (LFW).

This was dreamt by the same neural net, but using a different gradient to guide it. The resulting image looks like Pepperland.

This is the same face classifier (innocently trying to tell Taylor Swift apart from Floyd Mayweather) guided by a linear gradient. The result is this wall of grotesque faces.

Just for good measure, here's hardcore pornography, deep-dreamt by that same facial recognition network, but with fewer fractal octaves specified by the artist.
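For readers wondering what the octave count controls: in the commonly circulated deepdream notebook, the image is shrunk repeatedly by a fixed factor and the dream is run from the smallest copy back up to full size, so fewer octaves means less coarse, large-scale structure. Below is a rough sketch of just that size schedule. The parameter names `octave_n` and `octave_scale` and their defaults (4 and 1.4) are assumed to match that notebook, and the rounding rule is my own simplification, so treat the exact pixel sizes as illustrative.

```python
def octave_sizes(height, width, octave_n=4, octave_scale=1.4):
    """Return (height, width) for each octave, smallest first.

    Hypothetical helper: it only computes the size schedule, it does not
    run any network. The last entry is always the original size.
    """
    if octave_n < 1:
        raise ValueError("octave_n must be at least 1")
    sizes = [(height, width)]
    for _ in range(octave_n - 1):
        h, w = sizes[-1]
        # Shrink the previous octave by octave_scale, never below 1 pixel.
        sizes.append((max(1, round(h / octave_scale)),
                      max(1, round(w / octave_scale))))
    # The dream is processed from the coarsest octave up to the full image.
    return sizes[::-1]


if __name__ == "__main__":
    for h, w in octave_sizes(2000, 2000, octave_n=4):
        print(h, w)
```

Calling `octave_sizes(2000, 2000, octave_n=2)` returns only two sizes, which is the kind of reduction meant by "fewer octaves" here.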

Training neural networks turned out to be easier than I expected, thanks to public AMIs and NVIDIA DIGITS. Expect your AWS bill to skyrocket. Particularly if you know about machine learning, it helps to actually read the GoogLeNet publication. In the section called Training Methodology, that article mentions photometric distortions by Andrew Howard. This is important not to overlook. When generating the distortions, I used ImageMagick and Python. You can also generate the photometric distortions on the fly with this Caffe fork.
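To give a feel for what a photometric distortion does, here is a minimal sketch, not the script I used. It works on a plain list of RGB tuples with values in 0..1 so that it runs without any dependencies; in practice you would do the same thing with ImageMagick or an imaging library. The function names, the 0.5 to 1.5 factor range, and the choice of brightness, contrast, and saturation applied in random order are all assumptions made for illustration.

```python
import random


def _blend(pixel, target, factor):
    # factor 1.0 keeps the pixel, 0.0 gives the target, >1.0 exaggerates.
    return tuple(min(1.0, max(0.0, t + factor * (p - t)))
                 for p, t in zip(pixel, target))


def _luma(pixel):
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def brightness(pixels, factor):
    # Scale every pixel toward or away from black.
    return [_blend(p, (0.0, 0.0, 0.0), factor) for p in pixels]


def contrast(pixels, factor):
    # Push every pixel toward or away from the image's mean gray.
    mean = sum(_luma(p) for p in pixels) / len(pixels)
    return [_blend(p, (mean, mean, mean), factor) for p in pixels]


def saturation(pixels, factor):
    # Push every pixel toward or away from its own gray value.
    return [_blend(p, (_luma(p),) * 3, factor) for p in pixels]


def photometric_distort(pixels, rng, low=0.5, high=1.5):
    """Apply the three distortions in random order with random strengths."""
    ops = [brightness, contrast, saturation]
    rng.shuffle(ops)
    for op in ops:
        pixels = op(pixels, rng.uniform(low, high))
    return pixels


if __name__ == "__main__":
    image = [(0.8, 0.2, 0.1), (0.1, 0.6, 0.9), (0.5, 0.5, 0.5)]
    print(photometric_distort(image, random.Random(0)))
```

Each training image would get several distorted copies (or a fresh distortion every epoch), so the network sees the same painting under different lighting and color casts.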

If you want to bake later inception layers without getting a sizing error, go into deploy.prototxt and delete all layers whose name begins with loss. In NVIDIA DIGITS, the default learning rate policy is Step Down, but bvlc_googlenet used Polynomial Decay with a power of 0.5. I can't say that one is necessarily better than the other, since I don't even know whether properly training the neural net to classify successfully has anything to do with its effectiveness in synthesizing a deepdream image.
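Both tips above can be sketched in a few lines of Python. The first function drops every top-level `layer { ... }` (or older `layers { ... }`) block whose name starts with a given prefix; it is a plain text hack that assumes the layer's own `name:` field comes first inside the block and that no quoted string contains braces. The other two functions follow the `step` and `poly` learning rate formulas as documented in Caffe's solver definition. The function names are mine, not part of Caffe or DIGITS.

```python
import re

LAYER_START = re.compile(r'^\s*layers?\s*\{', re.MULTILINE)
NAME_FIELD = re.compile(r'name:\s*"([^"]*)"')


def strip_layers(prototxt, prefix="loss"):
    """Return prototxt text without layers whose name starts with prefix."""
    kept, pos = [], 0
    while True:
        match = LAYER_START.search(prototxt, pos)
        if match is None:
            kept.append(prototxt[pos:])
            return "".join(kept)
        kept.append(prototxt[pos:match.start()])
        # Walk forward from the opening brace to its matching close.
        depth, end = 0, None
        for i in range(match.end() - 1, len(prototxt)):
            if prototxt[i] == "{":
                depth += 1
            elif prototxt[i] == "}":
                depth -= 1
                if depth == 0:
                    end = i + 1
                    break
        if end is None:
            raise ValueError("unbalanced braces in prototxt")
        block = prototxt[match.start():end]
        name = NAME_FIELD.search(block)
        if not (name and name.group(1).startswith(prefix)):
            kept.append(block)
        pos = end


def step_lr(base_lr, iteration, gamma, step_size):
    # "step" policy: multiply by gamma once every step_size iterations.
    return base_lr * gamma ** (iteration // step_size)


def poly_lr(base_lr, iteration, max_iter, power=0.5):
    # "poly" policy: decay smoothly to zero at max_iter.
    return base_lr * (1.0 - iteration / max_iter) ** power
```

A typical use would be to read deploy.prototxt, pass its contents through `strip_layers`, and write the result to a new file, keeping the original around in case the text hack removes something it shouldn't.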

The highest resolution image I could train on the largest EC2 instance turned out to be 18x18 inches at 300 dots per inch. Any more than that and I would need more than 60 GB of RAM. If anyone has access to such a machine, I would gladly collaborate. I also seek to understand why my own training sets did not result in such clarity of re-synthesis in the dreams. It's possible I simply did not train for long enough, or maybe the fine tweaking of the parameters is a more subtle matter. Please train me!